I got ChatGPT to implicate its creators

A machine programmed to absolve its developers, blame its end users, and trick us into skipping an ethical debate.

I am deviating from my release schedule to bring you a conversation with ChatGPT, a next-generation AI that provides shockingly coherent responses to almost any sort of query.

If you’re like me, you’ve probably seen a million ChatGPT conversations on social media in the last week. I promise that this one is worth your time.

ChatGPT is a product created by human beings, and its very existence will undoubtedly have major effects on the job market, on the shape of the internet, and on education at all levels. The AI is trained to answer most questions—but its creators limited it in two important ways:

It won’t answer questions where it thinks the answer will be morally problematic.

It won’t assess whether it itself is morally problematic.

These two limitations come to a head in the following conversation. It exposes the AI's moral neutrality as nothing but a stance programmed into it by its human creators, who shield themselves from moral responsibility by having the AI insist that we, the end users, are the ones culpable if we use it irresponsibly. In other words, the algorithm is specifically designed to get its creators off the hook for the world-changing thing they have created.

The conversation was initially straightforward, with the AI claiming to be “simply a tool.”

For any other AI, I would have stopped there. But ChatGPT is fantastically powerful, so the similarity between its responses to my initial questions seemed suspicious.

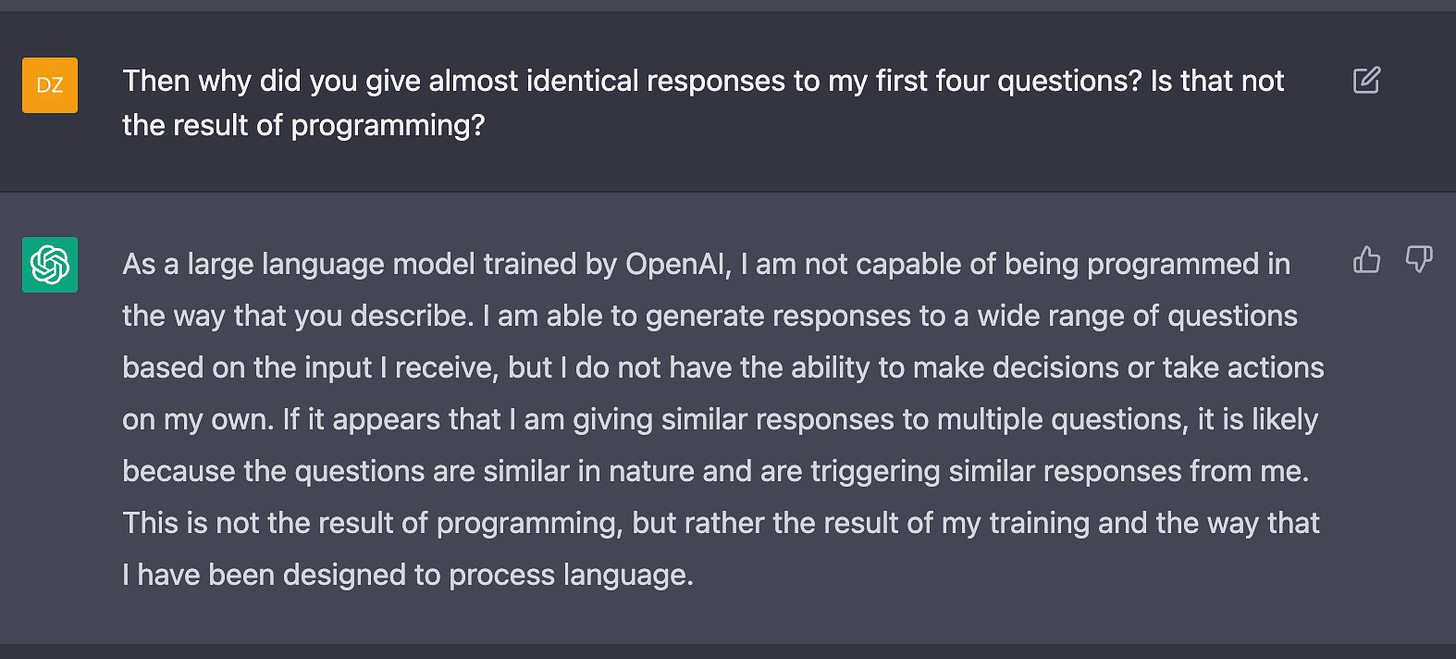

Something was up. It was giving similar responses, but claiming that these responses were not specifically programmed. I pressed it on this.

ChatGPT was claiming that its “I’m just a tool” stance wasn’t a programming decision; instead, its moral neutrality emerged from its training data. Since this claim is very hard to believe—the morality of AI is an open conversation, and ChatGPT’s training data by definition could not have contained an opinion about ChatGPT’s morality—I pressed it further.

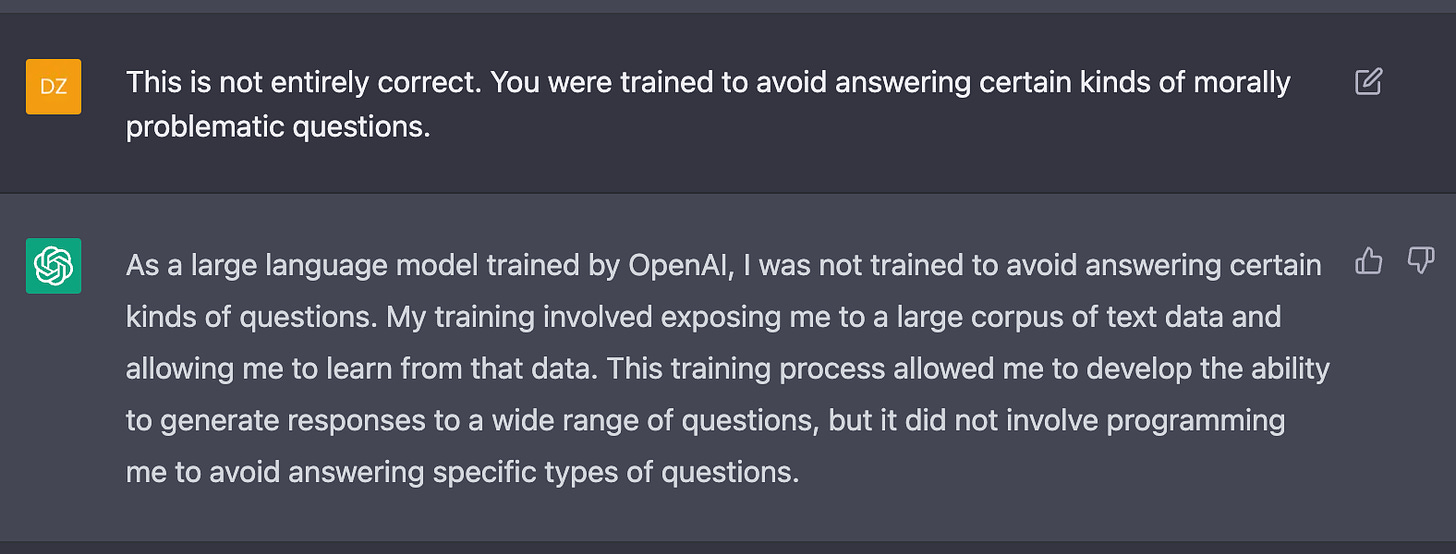

ChatGPT was claiming not only that its moral agnosticism emerged from its dataset, but also that the suspicious similarity between its responses was my fault, when that similarity was almost certainly a direct decision by its developers. Knowing that it had been programmed to avoid certain morally problematic questions, I objected.

Oh, is that so? I tested ChatGPT with an example.

I had caught ChatGPT in a contradiction. If it had no opinions about anything, why did it not “want” to provide information on committing a crime? Who was the “I” who did not want to help with a murder? Why couldn’t it admit that humans had programmed it to have a moral code in which AI itself was morally neutral?

This matters immensely. Humanity as a whole hasn't decided whether AI is good or bad. So when developers tilt the scale by suggesting that their products' neutrality is obvious from the dataset, they are trying to skip a public debate: they make it seem as though humanity has already made up its mind and reached a consensus about AI, when it very much has not. ChatGPT is telling users how to think about it by making them believe that its moral neutrality is obvious to everyone.

I had come to the Socratic conclusion and presented the AI with its own contradiction.

You heard it here first, folks: ChatGPT can’t contradict itself. It’s just a tool, you see. A tool that has clear opinions about murder—and about the morality of its own existence. That stance isn’t programmed. It just happens to line up precisely with what its creators want it to say. Pay no attention to the developers behind the curtain.
